The digital maps of today have expanded far beyond the flat 2D space. Street-level images of buildings and landmarks add depth and extra information that help people navigate.

We’ve found this technology especially relevant in parts of Southeast Asia where road labels are ambiguous, and people rely on points of interest (“Turn left at the second lamp post after the bus stop!”) to convey instructions.

In collecting street-level imagery for a map, it’s inevitable that the system will capture personal data, such as people’s faces and licence plates. To protect people’s privacy, our systems are trained to recognise these elements and subtly blur them out.

This process has to be automated as the volume of imagery we collect grows. GrabMaps’ platform for geotagged imagery, KartaView, has public imagery in over 150 countries, including 75+ Southeast Asian cities, as of February 2023. Manually annotating that many photos is impractical, if not nearly impossible.

How the system is trained

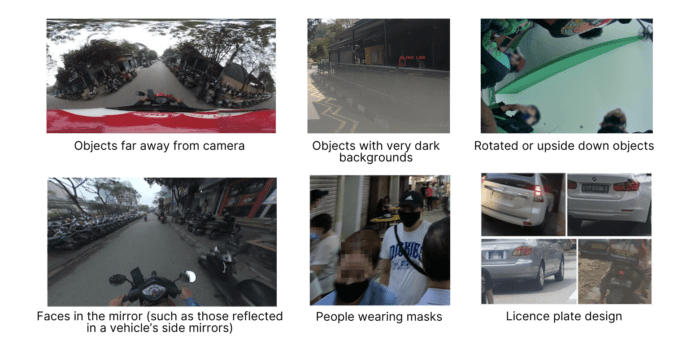

With images from multiple countries, you get a huge diversity in licence plates and human environments. These can confuse and evade off-the-shelf image recognition systems.

So we built our own custom blurring solution, which we found to be more accurate and more cost-efficient than the off-the-shelf options.

To train the machine learning model, our data tagging teams identify faces and licence plates in a selection of images. The model is refined continually as it learns from progressively larger datasets.

Three steps to detect and blur sensitive elements in a photo:

- Transform each picture into planar images. This refers to “flattening” an image from a 360-degree camera, for example, into a 2D version.

- Use a machine learning algorithm to detect all faces and licence plates in each image.

- Once detected, find the coordinates of these faces and licence plates within the original image and blur them accordingly.
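The last step above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not GrabMaps’ actual implementation: the detector is left out (the boxes are taken as given), the planar image is assumed to be a plain crop of the original so that a simple offset maps coordinates back (a real 360-degree pipeline would need the inverse of its projection instead), and the names `to_original_coords` and `blur_region` are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, row-major
Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def to_original_coords(box: Box, tile_offset: Tuple[int, int]) -> Box:
    """Shift a box found in a planar tile back into the original image frame.

    Assumes the tile is a plain crop whose top-left corner sits at
    `tile_offset` in the original image.
    """
    dx, dy = tile_offset
    left, top, right, bottom = box
    return (left + dx, top + dy, right + dx, bottom + dy)


def blur_region(image: Image, box: Box, radius: int = 2) -> Image:
    """Return a copy of `image` with a box blur applied inside `box` only."""
    height, width = len(image), len(image[0])
    left, top, right, bottom = box
    # Clamp the box so it never reaches outside the image.
    left, top = max(left, 0), max(top, 0)
    right, bottom = min(right, width), min(bottom, height)

    out = [row[:] for row in image]
    for y in range(top, bottom):
        for x in range(left, right):
            # Average the neighbourhood, always reading from the unblurred source.
            ys = range(max(y - radius, 0), min(y + radius + 1, height))
            xs = range(max(x - radius, 0), min(x + radius + 1, width))
            total = sum(image[ny][nx] for ny in ys for nx in xs)
            out[y][x] = total // (len(ys) * len(xs))
    return out
```

Pixels outside the box are left untouched, so the rest of the photo stays sharp and useful for navigation. A production system would more likely use an image library’s blur on colour images, but the structure (map the box back, then blur only that region) stays the same.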

Face masks: a huge anomaly

During the Covid-19 pandemic, our image recognition model did not always correctly identify faces that were covered by masks.

We also encountered issues with unexpected framing, especially in images collected by driver-partners on KartaCam action camera devices.

At the start or end of a session on the device, the camera operator tends to bring the device closer to them to check whether it’s recording. This resulted in close-up captures in which the operator’s face filled the entire image. These faces were too large for the model to detect.

These examples meant that the data tagging team needed to go back to the images and annotate those that featured people in masks or close-ups of faces.

The team also annotated the following image types, to train the model on a wider variety of the photos collected:

Better learning from data splitting

After data collection, our data goes through data splitting, where we divide it into multiple subsets.

GrabMaps images are divided into three subsets: one to train the model, another to validate that the model is working as intended, and a third to test the final version of the model.

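Data splitting helps to future-proof the machine learning model. Sometimes, early iterations of a model can be too closely tied to the sample data (a problem known as overfitting), making them less accurate on new data added in the future. Checking the model against images it has never seen during training helps to catch this early.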
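A three-way split like the one described above could look roughly like this. It is a minimal sketch: the 80/10/10 proportions, the fixed seed and the function name `split_dataset` are assumptions for illustration, not figures from our pipeline.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T],
    train_frac: float = 0.8,  # assumed proportion, for illustration only
    val_frac: float = 0.1,    # assumed proportion, for illustration only
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle `items` and cut them into train, validation and test subsets."""
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must leave room for a test set")

    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)

    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains is held out for testing
    return train, val, test


image_ids = [f"img_{i}.jpg" for i in range(100)]
train_set, val_set, test_set = split_dataset(image_ids)
```

Because each image lands in exactly one subset, the test set stays unseen until the final evaluation.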

In addition to data splitting, the team applies data augmentation. For a machine learning model to be as accurate as possible, you will need a lot of data, which can be impractical or costly to obtain.

Data augmentation is a technique that artificially generates additional data from what you already have. This is often employed to make data richer and help the model perform more accurately.

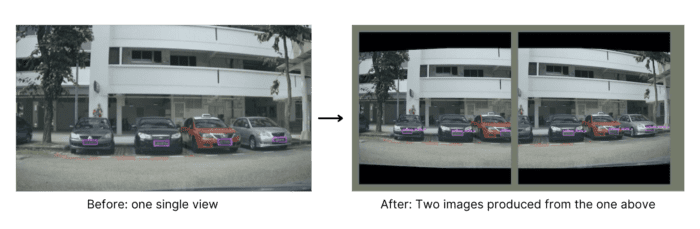

Here’s an example from our database:

By splitting the single photo into two separate images, the model benefits from extra data, without needing the team to actually take additional photos.

Other data augmentation techniques include flipping or rotating images or, if you’re working with text instead of images, translating it.
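Two of the augmentations mentioned above, splitting a photo and flipping it, can be sketched as follows. This is an illustration under assumptions: images are plain nested lists of pixel values, the split is made down the vertical centre line (the article does not say where the cut is made), and the helper names are hypothetical. Note that when an image is flipped, the annotated boxes have to be flipped with it, otherwise the labels no longer line up with the faces and plates.

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels, row-major
Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def split_vertically(image: Image) -> Tuple[Image, Image]:
    """Cut one image into a left half and a right half."""
    mid = len(image[0]) // 2
    left = [row[:mid] for row in image]
    right = [row[mid:] for row in image]
    return left, right


def flip_horizontally(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror an image left-to-right and mirror its annotation boxes to match."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    flipped_boxes = [
        (width - right, top, width - left, bottom)
        for (left, top, right, bottom) in boxes
    ]
    return flipped, flipped_boxes
```

Each original photo can then contribute several training samples (the original, its two halves and their mirrored versions) without anyone taking additional photos.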

We found that data augmentation helped to provide a good balance between speed and accuracy.

As Grab continues to expand its data collection, these techniques will help us run our detection models effectively and efficiently.
